CS295J/Research proposal (draft 2)

Introduction

- Owners: Adam Darlow, Eric Sodomka, Trevor

Propose: The design, application and evaluation of a novel, cognition-based, computational framework for assessing interface design and providing automated suggestions to optimize usability.

Evaluation Methodology: Our techniques will be evaluated quantitatively through a series of user-study trials, as well as qualitatively by a team of expert interface designers.

Contributions and Significance: We expect this work to make the following contributions:

- design-space analysis and quantitative evaluation of cognition-based techniques for assessing user interfaces.

- design and quantitative evaluation of techniques for suggesting optimized interface-design changes.

- an extensible, multimodal software architecture for capturing user traces integrated with pupil-tracking data, auditory recognition, and muscle-activity monitoring.

- specification (language?) of how to define an interface evaluation module and how to integrate it into a larger system.

- (there may be more here, like testing different cognitive models, generating a markup language to represent interfaces, maybe even a unique metric space for interface usability)

--

We propose a framework for interface evaluation and recommendation that integrates behavioral models and design guidelines from both cognitive science and HCI. The framework behaves like a committee of specialized experts, in which each expert provides its own assessment of the interface based on its particular knowledge of HCI or cognitive science. For example, an expert may provide an evaluation based on the GOMS method, Fitts's law, Maeda's design principles, or cognitive models of learning and memory. An aggregator collects these assessments, weights each expert's opinion, and outputs to the developer a merged evaluation score and a weighted set of recommendations.
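The committee-and-aggregator idea can be sketched in a few lines. This is a minimal illustration under stated assumptions, not a committed design: each expert is assumed to be a function returning a score in [0, 1] plus textual recommendations, and `Assessment`, `aggregate`, and the fixed weights are hypothetical names and values.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple

# Hypothetical expert output: a score in [0, 1] and free-text recommendations.
@dataclass
class Assessment:
    score: float
    recommendations: List[str] = field(default_factory=list)

# Hypothetical expert signature: interface description -> Assessment.
Expert = Callable[[dict], Assessment]

def aggregate(interface: dict, experts: Dict[str, Expert],
              weights: Dict[str, float]) -> Tuple[float, List[Tuple[str, float]]]:
    """Merge expert assessments into one weighted score and a ranked recommendation list."""
    total_weight = sum(weights[name] for name in experts)
    merged_score = 0.0
    support: Dict[str, float] = {}
    for name, expert in experts.items():
        result = expert(interface)
        w = weights[name] / total_weight  # normalized, so the merged score stays in [0, 1]
        merged_score += w * result.score
        for rec in result.recommendations:
            # A recommendation's support is the summed weight of the experts proposing it.
            support[rec] = support.get(rec, 0.0) + w
    ranked = sorted(support.items(), key=lambda kv: kv[1], reverse=True)
    return merged_score, ranked
```

With two toy experts weighted 1:3, a recommendation proposed by both rises to the top of the ranked list, which is the "weighted set of recommendations" behavior described above.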

Systematic methods of estimating human performance with computer interfaces are rarely used despite their obvious benefits, because of the overhead involved in implementing them. To test an interface, both manual coding systems like the GOMS variants and user simulations like those based on ACT-R/PM and EPIC require detailed pseudo-code descriptions of the user's workflow with the application interface. Any change to the interface then requires extensive changes to the pseudo-code, a major problem given the trial-and-error nature of interface design. Updating the models themselves is even more complicated: even an expert in CPM-GOMS, for example, cannot necessarily adapt it to account for results from new cognitive research.

Our proposal makes automatic interface evaluation easier to use in several ways. First, we propose to divide the input to the system into three separate parts: functionality, user traces, and interface. Because the functionality is separated from the interface, even radical interface changes will require updating only the interface part of the input. The user traces are also defined over the functionality, so they too carry over across different interfaces. Second, the parallel modular architecture lowers the "entry cost" of using the tool. The system includes a broad array of evaluation modules, some very simple and some more complex. The simpler modules use only a subset of the input that a system like GOMS or ACT-R would require. So while more input will still lead to better output, interface designers can get minimal evaluations from minimal information. For example, a visual search module may not need any functionality or user traces to determine whether all interface elements are distinct enough to be easy to find. Finally, a parallel modular architecture is much easier to augment with relevant cognitive and design evaluations.
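As an illustration of a module that needs only the interface description, here is a sketch of a visual-distinctness check. It assumes, hypothetically, that each element is described by numeric visual features normalized to [0, 1] and that two elements are "too similar" when their Euclidean distance falls below an arbitrary threshold of 0.2. A real visual search module would rest on a proper model of visual search; the feature set and threshold here are placeholders.

```python
from itertools import combinations
from typing import Dict, List, Tuple

# Hypothetical GUI-only input: element name -> normalized visual features (e.g. hue, size).
Features = Dict[str, float]

def feature_distance(a: Features, b: Features) -> float:
    """Euclidean distance over the features both elements share."""
    keys = a.keys() & b.keys()
    return sum((a[k] - b[k]) ** 2 for k in keys) ** 0.5

def confusable_pairs(gui: Dict[str, Features], threshold: float = 0.2) -> List[Tuple[str, str]]:
    """Return pairs of elements whose features are too similar to be easy to find.
    The threshold is an illustrative assumption, not a validated parameter."""
    return [
        (x, y)
        for x, y in combinations(sorted(gui), 2)
        if feature_distance(gui[x], gui[y]) < threshold
    ]
```

Note that no functionality or user traces are required: the module can run on the GUI specification alone.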

Background / Related Work

Each person should add the background related to their specific aims.

- Steven Ellis - Cognitive models of HCI, including GOMS variations and ACT-R

- EJ - Design Guidelines

- Jon - Perception and Action

- Andrew - Multiple task environments

- Gideon - Cognition and dual systems

- Ian - Interface design process

- Trevor - User trace collection methods (especially any eye-tracking, EEG, ... you want to suggest using)

Cognitive Models

I plan to port over most of the background on cognitive models of HCI from the old proposal

Additions will include:

- CPM-GOMS as a bridge from GOMS architecture to the promising procedural optimization of the Model Human Processor

- Context of CPM development, discuss its relation to original GOMS and KLM

- Establish the tasks which were relevant for optimization when CPM was developed and note that its obsolescence may have been unavoidable

- Focus on CPM as the first step in transitioning from descriptive data, provided by mounting efforts in the cognitive sciences realm to discover the nature of task processing and accomplishment, to prescriptive algorithms which can predict an interface’s efficiency and suggest improvements

- CPM’s purpose as an abstraction of cognitive processing – a symbolic representation not designed for accuracy but precision

- CPM’s successful trials, e.g., Project Ernestine

- Implications of this project include CPM’s ability to accurately estimate processing at a psychomotor level

- The project does, however, suggest limitations when one examines more complex tasks involving deeper and more numerous cognitive processes


- ACT-R as an example of a progressive cognitive modeling tool

- A tool clearly built by and for cognitive scientists; as a result, it presents a much more accurate view of human processing, which is helpful for our research

- Built-in automation, which now seems to be a standard feature of cognitive modeling tools

- Still an abstraction of cognitive processing, but makes adaptation to cutting-edge cognitive research findings an integral aspect of its modular structure

- Expand on its focus on multitasking: it takes what was a huge advance from GOMS to its CPM variant and brings the simulation several steps closer to approximating the nature of cognition with regard to HCI

- Far more accessible, both for researchers and for the lay user/designer, in its portability to LISP, its pre-built modules representing cognitive capacities, and its underlying algorithms modeling paths of cognitive processing

Design guidelines

A multitude of rule sets exist for the design of not only interfaces but also architecture, city planning, and software development. They range in scale from a single primary rule to as many as Christopher Alexander's 253 rules for urban environments,[1] which he introduced with the concept of design patterns in the 1970s. Studies have likewise examined how these rules are used:[2] guidelines are often only partially understood, indistinct to the developer, and "fraught" with potential usability problems in real-world situations.

Application to AUE

And yet the vast majority of guideline sets, including the most popular, have been arrived at heuristically. The most successful, such as Raskin's and Shneiderman's, were forged from years of observation rather than empirical study and experimentation. The problem resembles the circular logic faced by automated usability evaluation: an automated system can only offer suggestions drawn from a set of preprogrammed guidelines, which often have not been subjected to rigorous experimentation.[3] Existing studies have traditionally emphasized either the development of guidelines or the application of existing guidelines to automated evaluation. A mutually reinforcing development of both simultaneously has not been attempted.

Overlap between rulesets is inevitable. To evaluate existing rulesets efficiently, without extracting and analyzing each rule individually, it may be desirable to identify the overarching principles or philosophy (at most two or three) of a given ruleset and determine their quantitative relevance to problems of cognition.

Popular and seminal examples

Shneiderman's Eight Golden Rules date to 1987 and are arguably the most cited. They are heuristic, but can be loosely classified by cognitive objective: at least two rules apply primarily to repeated use rather than discoverability. Up to five of Shneiderman's rules emphasize predictability in the outcomes of operations and increased feedback and control for the user. His final rule, paradoxically, removes control from the user by calling for a reduced short-term memory load, which we can arguably classify as simplicity.

Raskin's Design Rules are grouped by the author into five principles, augmented by definitions and supporting rules. While one principle is primarily aesthetic (a design problem arguably outside the bounds of this proposal) and one is a basic endorsement of testing, the remaining three reflect philosophies similar to Shneiderman's: reliability or predictability; simplicity or efficiency (which we can construe as two sides of the same coin); and, finally, a concept he introduces, uninterruptibility.

Maeda's Laws of Simplicity are fewer and ostensibly emphasize simplicity exclusively, although elements of use as described by Shneiderman's rules and efficiency as defined by Raskin may be facets of this simplicity. Google's corporate mission statement presents Ten Principles, only half of which can be considered true interface guidelines. Efficiency and simplicity are cited explicitly, aesthetics are once again noted as crucial, and working within a user's trust is another application of predictability.

Elements and goals of a guideline set

Myriad rulesets exist, but real variation among them is scarce: it indeed seems possible to distill these common rulesets into overarching principles that can be converted to, or associated with, quantifiable cognitive properties. For example, simplicity likely has an analogue in the user's short-term memory or visual retention, vis-à-vis the rule of "seven, plus or minus two." Predictability may likewise have an analogue in Activity Theory, with regard to a user's perceptual expectations for a given action; uninterruptibility has implications for cognitive task-switching;[4] and so forth.

Within the scope of this proposal, we aim to reduce and refine these philosophies found in seminal rulesets and identify their logical cognitive analogues. By assigning a quantifiable taxonomy to these principles, we will be able to rank and weight them with regard to their real-world applicability, developing a set of "meta-guidelines" and rules for applying them to a given interface in an automated manner. Combined with cognitive models and multi-modal HCI analysis, we seek to develop, in parallel with these guidelines, the interface evaluation system responsible for their application.

Perception and Action (in progress)

- Information Processing Approach

- Advantages

- Formalism eases translation of theory into scripting language

- Disadvantages

- Assumes symbolic representation


- Ecological (Gibsonian) Approach

- Advantages

- Emphasis on bodily and environmental constraints

- Disadvantages

- Lack of formalism hinders translation of theory into scripting language


Specific Aims and Contributions (to be separated later)

Specific Aims

- Incorporate interaction history mechanisms into a set of existing applications.

- Perform user-study evaluation of history-collection techniques.

- Distill a set of cognitive principles/models, and evaluate empirically?

- Build/buy sensing system to include pupil-tracking, muscle-activity monitoring, auditory recognition.

- Design techniques for manual/semi-automated/automated construction of <insert favorite cognitive model here> from interaction histories and sensing data.

- Design system for posterior analysis of interaction history w.r.t. <insert favorite cognitive model here>, evaluating critical path <or equivalent> trajectories.

- Design cognition-based techniques for detecting bottlenecks in critical paths, and offering optimized alternatives.

- Perform quantitative user-study evaluations, collect qualitative feedback from expert interface designers.

Contributions

- Design-space analysis and quantitative evaluation of cognition-based techniques for assessing user interfaces.

- Design and quantitative evaluation of techniques for suggesting optimized interface-design changes.

- An extensible, multimodal software architecture for capturing user traces integrated with pupil-tracking data, auditory recognition, and muscle-activity monitoring.

- (there may be more here, like testing different cognitive models, generating a markup language to represent interfaces, maybe even a unique metric space for interface usability)

--

See the flowchart for a visual overview of our aims.

In order to use this framework, a designer will have to provide:

- Functional specification - the possible interactions between the user and the application. These can be thought of as method signatures, each with a name (e.g., setVolume), a direction (to user or from user), and a list of value types (boolean, number, text, video, ...).

- GUI specification - a mapping of interactions to interface elements (e.g., setVolume is mapped to the grey knob in the bottom left corner with clockwise turning increasing the input number).

- Functional user traces - sequences of representative ways in which the application is used. Instead of writing them, the designer could have users use the application with a trial interface and then use our methods to generalize the user traces beyond the specific interface (The second method is depicted in the diagram). As a form of pre-processing, the system also generates an interaction transition matrix which lists the probability of each type of interaction given the previous interaction.

- Utility function - this is a weighting of various performance metrics (time, cognitive load, fatigue, etc.), where the weighting expresses the importance of a particular dimension to the user. For example, a user at NASA probably cares more about interface accuracy than speed. By passing this information to our committee of experts, we can create interfaces that are tuned to maximize the utility of a particular user type.
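A minimal sketch of how these inputs might be encoded, together with the interaction transition matrix from the user-traces item. The `Interaction` record, the direction strings, and the `gui_spec` mapping are hypothetical encodings. The matrix is estimated here by simply counting consecutive pairs of interactions in the traces and normalizing each row, which is one straightforward reading of "the probability of each type of interaction given the previous interaction."

```python
from collections import Counter, defaultdict
from dataclasses import dataclass
from typing import Dict, List, Tuple

# Hypothetical encoding of one functional-specification entry.
@dataclass(frozen=True)
class Interaction:
    name: str                      # e.g. "setVolume"
    direction: str                 # "from_user" or "to_user"
    value_types: Tuple[str, ...]   # e.g. ("number",)

functional_spec = [Interaction("setVolume", "from_user", ("number",))]

# Hypothetical GUI specification: interaction name -> interface element description.
gui_spec = {"setVolume": "grey knob, bottom left, clockwise increases value"}

def transition_matrix(traces: List[List[str]]) -> Dict[str, Dict[str, float]]:
    """Estimate P(next interaction | previous interaction) from functional user traces."""
    counts: Dict[str, Counter] = defaultdict(Counter)
    for trace in traces:
        for prev, nxt in zip(trace, trace[1:]):
            counts[prev][nxt] += 1
    return {
        prev: {nxt: n / sum(c.values()) for nxt, n in c.items()}
        for prev, c in counts.items()
    }
```

Because the traces name functions rather than widgets, swapping `gui_spec` for a different mapping leaves both the traces and the matrix unchanged.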

Each of the modules can use all of this information or a subset of it. Our approach stresses flexibility and the ability to give more meaningful feedback the more information is provided. After processing the information sent by the system of experts, the aggregator will output:

- An evaluation of the interface. Evaluations are expressed both in terms of the utility function components (e.g., time, fatigue, cognitive load) and in terms of the overall utility for this interface (as defined by the utility function). These evaluations are given in the form of an efficiency curve, where the utility received on each dimension can change as the user becomes more accustomed to the interface.

- Suggested improvements for the GUI are also output. These suggestions are meant to optimize the utility function that was input to the system. If a user values accuracy over time, interface suggestions will be made accordingly.
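The utility function and the efficiency curve can be illustrated together. In this sketch, utility is assumed to be the negative weighted sum of per-dimension costs, and the curve is assumed to follow the power law of practice, C_N = C_1 * N^(-alpha). Both the linear weighting and the choice of the power law are illustrative assumptions, and all numbers below are made up.

```python
from typing import Dict, List

def utility(costs: Dict[str, float], weights: Dict[str, float]) -> float:
    """Hypothetical utility: negative weighted cost (lower cost on heavily weighted dimensions is better)."""
    return -sum(weights.get(dim, 0.0) * value for dim, value in costs.items())

def practice_curve(first_trial_cost: float, alpha: float, trials: int) -> List[float]:
    """Cost on trials 1..trials under the power law of practice, C_N = C_1 * N**(-alpha)."""
    return [first_trial_cost * n ** (-alpha) for n in range(1, trials + 1)]

# Illustrative comparison: a user who weights errors heavily (the NASA example)
# prefers the slower but more accurate interface A.
ui_a = {"time": 12.0, "errors": 0.5}
ui_b = {"time": 8.0, "errors": 1.0}
nasa_weights = {"time": 1.0, "errors": 10.0}
speed_weights = {"time": 1.0, "errors": 1.0}
```

With `nasa_weights`, interface A scores -17 against B's -18; with `speed_weights`, B wins (-9 against -12.5). The same interfaces rank differently depending on the utility function supplied.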

Recording User-Interaction Primitives

- Owner: Trevor O'Brien

Aims

- Develop system for logging low-level user-interactions within existing applications. By low-level interactions, we refer to those interactions for which a quantitative, predictive, GOMS-like model may be generated.

- At the same level of granularity, integrate explicit interface interactions with multimodal sensing data, e.g., pupil tracking, muscle-activity monitoring, auditory recognition, EEG data, and facial and posture-focused video.
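One way to integrate the two streams "at the same level of granularity" is to attach to each logged interaction the sensor reading nearest to it in time. The sketch below assumes a hypothetical `InteractionEvent` record, sensor samples stored as sorted `(timestamp, reading)` pairs, and an arbitrary 50 ms tolerance. Real systems would also need clock synchronization across devices, which is not addressed here.

```python
from bisect import bisect_left
from dataclasses import dataclass
from typing import List, Optional, Tuple

# Hypothetical record for one logged low-level interaction.
@dataclass
class InteractionEvent:
    t: float      # seconds since session start
    kind: str     # e.g. "click", "keypress"
    target: str   # interface element identifier

def nearest_sample(samples: List[Tuple[float, object]], t: float,
                   max_gap: float = 0.05) -> Optional[object]:
    """Return the reading closest in time to t, or None if none lies within max_gap seconds.
    `samples` must be sorted by timestamp."""
    times = [s[0] for s in samples]
    i = bisect_left(times, t)
    candidates = [j for j in (i - 1, i) if 0 <= j < len(samples)]
    if not candidates:
        return None
    best = min(candidates, key=lambda j: abs(times[j] - t))
    return samples[best][1] if abs(times[best] - t) <= max_gap else None

def align(events: List[InteractionEvent], sensor_samples: List[Tuple[float, object]],
          max_gap: float = 0.05) -> List[Tuple[InteractionEvent, Optional[object]]]:
    """Pair each interaction event with the nearest sensor reading (e.g. a pupil-diameter sample)."""
    return [(e, nearest_sample(sensor_samples, e.t, max_gap)) for e in events]
```

Events with no sensor reading close enough are paired with `None` rather than with a stale reading.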

Contributions

- Extensible, multimodal, HCI framework for recording rich interaction-history data in existing applications.

Significance

- Provide rich history data to serve as the basis for novel quantitative interface evaluation.

- Aid expert HCI and visual designers in traditional design processes.

- Provide data for automated machine-learning strategies applied to interaction.

Background and Related Work

- The utility of interaction histories with respect to assessing interface design has been demonstrated in [5].

- In addition, data management histories have been shown effective in the visualization community in [6] [7] [8], providing visualizations by analogy [9] and offering automated suggestions [10], which we expect to generalize to user interaction history.

Semantic-level Interaction Chunking

- Owner: Trevor O'Brien

Aims

- Develop techniques for chunking low-level interaction primitives into semantic-level interactions, given an application's functionality and data-context. (And de-chunking? Invertible mapping needed?)

- Perform user-study evaluation to validate chunking methods.
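As a simple baseline for chunking, low-level primitives can be segmented by greedy longest match against a table of known primitive sequences. The chunk table and primitive names below are hypothetical; the proposal envisions deriving such mappings from the application's functionality and data context, and a learned or probabilistic segmenter may do better than this greedy scheme.

```python
from typing import Dict, List, Tuple

# Hypothetical chunk table: sequence of low-level primitives -> semantic-level interaction.
CHUNKS: Dict[Tuple[str, ...], str] = {
    ("click:name_field", "type", "press:tab"): "enterName",
    ("click:file_menu", "click:save"): "saveDocument",
    ("press:ctrl", "press:s"): "saveDocument",  # a different low-level technique, same semantics
}

def chunk(primitives: List[str], chunks: Dict[Tuple[str, ...], str] = CHUNKS) -> List[str]:
    """Greedy longest-match segmentation; unmatched primitives pass through unchanged."""
    longest = max(len(k) for k in chunks)
    out: List[str] = []
    i = 0
    while i < len(primitives):
        for n in range(min(longest, len(primitives) - i), 0, -1):
            key = tuple(primitives[i:i + n])
            if key in chunks:
                out.append(chunks[key])
                i += n
                break
        else:  # no chunk starts here
            out.append(primitives[i])
            i += 1
    return out
```

Because the menu path and the keyboard shortcut both map to `saveDocument`, a model operating on the chunked output is indifferent to which low-level technique the interface offers. This is the property that lets low-level techniques be swapped out.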

Contributions

- Design and user-study evaluation of semantic chunking techniques.

Significance

- A low-level <--> semantic-level mapping allows cognitive modeling to be applied at the functionality level, where low-level interaction techniques can be swapped out. This will allow our interface assessment system to make feasible suggestions for better interface designs.

Background and Related Work

- Chunking interactions has been studied in the HCI community, e.g., in [5], Graphical Histories for Visualization: Supporting Analysis, Communication, and Evaluation (InfoVis 2008).

Reconciling Usability Heuristics with Cognitive Theory

- Owner: E J Kalafarski 14:56, 28 April 2009 (UTC)

A weighted framework for the unification of established heuristic usability guidelines and accepted cognitive principles.

Demonstration: Three groups of experts anecdotally apply cognitive principles, heuristic usability principles, and a combination of the two.

- A "cognition expert," given a constrained, limited-functionality interface, develops an independent evaluative value for each interface element based on accepted cognitive principles.

- A "usability expert" develops an independent evaluative value for each interface element based on accepted heuristic guidelines.

- A third expert applies several unified cognitive analogues from a matrix of weighted cognitive and

- User testing demonstrates the assumed efficacy and applicability of the matricized analogues versus independent application of analogued principles.

Dependency: A set of established cognitive principles, selected with an eye toward heuristic analogues.

Dependency: A set of established heuristic design guidelines, selected with an eye toward cognitive analogues.

Evaluation for Recommendation and Incremental Improvement

- Owner: E J Kalafarski 14:56, 28 April 2009 (UTC)

Using a limited set of interface evaluation modules, we demonstrate, in a narrow and controlled manner, the proposed efficiency and accuracy of a method for aggregating individual interface suggestions based on accepted CPM principles (e.g., Fitts' Law and Affordance) and applying them to the incremental improvement of the interface.

Demonstration: A narrow but comprehensive study to demonstrate the efficacy and efficiency of automated aggregation of interface evaluations versus independent analysis. The aim is to show not total replacement of the expert, but a gain in evaluation speed comparable to the loss of control and features.

- Given a carefully constrained interface, perhaps with as few as two buttons and a minimalist feature set, an expert interface designer who is given the individual results of several basic evaluation modules makes recommendations and suggestions for the design of the interface.

- The aggregation meta-module conducts a similar survey of the module outputs, producing recommendations and suggestions for improving the given interface.

- A separate, independent body of experts then implements the two sets of suggestions and performs a user study on the resulting interfaces, analyzing the change in usability and comparing it to the time and resources committed by the evaluation expert and the aggregation module, respectively.

Dependency: Module for the analysis of Fitts' Law as it applies to the individual elements of a given interface.

Dependency: Module for the analysis of affordance as it applies to the individual elements of a given interface.
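A Fitts' Law module could be as small as the sketch below, which uses the common Shannon formulation MT = a + b * log2(D/W + 1). The constants `A` and `B` are placeholders that would have to be fit for a given device and user population. The per-element `(distance, width)` input, the time budget, and the suggestion wording are hypothetical.

```python
import math
from typing import Dict, List, Tuple

# Placeholder regression constants (seconds, seconds per bit); illustrative only.
A, B = 0.1, 0.15

def movement_time(distance: float, width: float, a: float = A, b: float = B) -> float:
    """Fitts' law, Shannon formulation: MT = a + b * log2(D / W + 1)."""
    return a + b * math.log2(distance / width + 1)

def fitts_report(elements: Dict[str, Tuple[float, float]], budget: float) -> List[str]:
    """elements: name -> (distance from typical cursor start, width along the approach axis).
    Returns a suggestion for every element whose predicted movement time exceeds `budget`."""
    suggestions = []
    for name, (d, w) in elements.items():
        mt = movement_time(d, w)
        if mt > budget:
            suggestions.append(f"{name}: predicted {mt:.2f}s; consider enlarging it or moving it closer")
    return suggestions
```

For a 10-pixel-wide submit button 630 pixels away, the index of difficulty is log2(64) = 6 bits, giving a predicted time of about 1.0 s with these placeholder constants.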

Evaluation Metrics

Owner: Gideon Goldin

- Architecture Outputs

- Time (time to complete task)

- Performs as well or better than CPM-GOMS, demonstrated with user tasks

- Dependencies

- CPM-GOMS Module with cognitive load extension

- Cognitive Load

- Predicts cognitive load during tasks, demonstrated with user tasks

- Dependencies

- CPM-GOMS Module with cognitive load extension

- Facial Gesture Recognition Module

- Frustration

- Accurately predicts users' frustration levels, demonstrated with user tasks

- Dependencies

- Facial Gesture Recognition Module

- Galvanic Skin Response Module

- Interface Efficiency Module

- Aesthetic Appeal

- Analyzes whether the interface is aesthetically pleasing, demonstrated with user tasks

- Dependencies

- Aesthetics Module

- Simplicity

- Analyzes how simple the interface is, demonstrated with user tasks

- Dependencies

- Interface Efficiency Module

Parallel Framework for Evaluation Modules

- Owner: Adam Darlow, Eric Sodomka

This section will describe in more detail the inputs, outputs and architecture that were presented in the introduction.

Contributions

- Create a framework that provides better interface evaluations than currently existing techniques, and a module weighting system that provides better evaluations than any of its modules taken in isolation.

- Demonstrate by running user studies and comparing this performance to expected performance, as given by the following interface evaluation methods:

- Traditional, manual interface evaluation

- As a baseline.

- Using our system with a single module

- "Are any of our individual modules better than currently existing methods of interface evaluation?".

- Using our system with multiple modules, but have aggregator give a fixed, equal weighting to each module

- As a baseline for our aggregator: to show the value of adding dynamic weighting.

- Using our system with multiple modules, and allow the aggregator to adjust weightings for each module, but have each module get a single weighting for all dimensions (time, fatigue, etc.)

- For validating the use of a dynamic weighting system.

- Using our system with multiple modules, allowing the aggregator to adjust the weighting of each module and to give each module a separate weighting for every dimension (time, fatigue, etc.)

- For validating the use of weighting across multiple utility dimensions.


- Dependencies: Having a good set of modules to plug into the framework.
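The three aggregator conditions above differ only in how weights are indexed. The sketch assumes each module reports a score per dimension and uses a normalized weighted average. The function names, the weighted-average rule, and the module names in the example are hypothetical.

```python
from typing import Dict

Scores = Dict[str, Dict[str, float]]  # module -> dimension -> predicted score

def aggregate_equal(scores: Scores, dim: str) -> float:
    """Baseline condition: every module gets the same fixed weight."""
    vals = [s[dim] for s in scores.values() if dim in s]
    return sum(vals) / len(vals)

def aggregate_per_module(scores: Scores, w: Dict[str, float], dim: str) -> float:
    """One adjustable weight per module, shared across all dimensions."""
    pairs = [(w[m], s[dim]) for m, s in scores.items() if dim in s]
    return sum(wi * v for wi, v in pairs) / sum(wi for wi, _ in pairs)

def aggregate_per_dimension(scores: Scores, w: Dict[str, Dict[str, float]], dim: str) -> float:
    """A separate adjustable weight for every (module, dimension) pair."""
    pairs = [(w[m][dim], s[dim]) for m, s in scores.items() if dim in s]
    return sum(wi * v for wi, v in pairs) / sum(wi for wi, _ in pairs)
```

Comparing these three against the manual baseline and single-module runs isolates the contribution of each weighting refinement.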

Module Inputs (Incomplete)

- A set of utility dimensions {d1, d2, ...} are defined in the framework. These could be {d1="time", d2="fatigue", ...}

- A set of interaction functions. These specify all of the information that the application wants to give users or get from them. It is not tied to a specific interface. For example, the fact that an application shows videos would be included here. Whether it displays them embedded, in a pop-up window or fullscreen would not.

- A mapping of interaction functions to interface elements (e.g., buttons, windows, dials, ...). This may include extensive optional information describing visual properties, associated text, physical interactions (e.g., turning the dial clockwise increases the input value), and timing.

- Functional user traces - sequences of interaction functions that represent typical user interactions with the application. Could include a goal hierarchy, in which case the function sequence is at the bottom of the hierarchy.
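When a trace includes a goal hierarchy, the function sequence is recovered by reading off the leaves in order. The sketch assumes a hypothetical encoding in which a goal is a `(name, children)` pair and a leaf is an interaction-function name.

```python
from typing import List, Union

# Hypothetical encoding: a goal is (name, [children]); a leaf is an interaction-function name.
Goal = Union[str, tuple]

def leaf_sequence(goal: Goal) -> List[str]:
    """Flatten a goal hierarchy into the function sequence at its bottom (depth-first, left to right)."""
    if isinstance(goal, str):
        return [goal]
    _, children = goal
    seq: List[str] = []
    for child in children:
        seq.extend(leaf_sequence(child))
    return seq

trace = ("watch video", [("find video", ["search", "selectResult"]), "play", ("adjust", ["setVolume"])])
```

Here `leaf_sequence(trace)` yields `["search", "selectResult", "play", "setVolume"]`. Modules that ignore goals can consume this flat sequence, while goal-aware modules keep the hierarchy.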

Module Outputs

- Every module outputs at least one of the following:

- An evaluation of the interface

- This can be on any or all of the utility dimensions, e.g. evaluation={d1=score1, d2=score2, ...}

- This can alternately be an overall evaluation, ignoring dimensions, e.g. evaluation={score}

- In this case, the aggregator will treat this as the module giving the same score to all dimensions. Which dimension this evaluator actually predicts well on can be learned by the aggregator over time.

- Recommendation(s) for improving the interface

- This can be a textual description of what changes the designer should make

- This can alternately be a transformation that can automatically be applied to the interface language (without designer intervention)

- In addition to the textual or transformational description of the recommendation, a "change in evaluation" is output to describe how much the recommendation is expected to improve the interface

- Recommendation = {description="make this change", Δevaluation={d1=score1, d2=score2, ...}}

- Like before, this Δevaluation can cover any number of dimensions, or it can be generic.

- Either a single recommendation or a set of recommendations can be output

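The output rules above translate directly into small data types. In this sketch, `DIMENSIONS`, `expand`, `Recommendation`, and `ModuleOutput` are hypothetical names, and the optional `transform` field stands in for the machine-applicable transformation whose format is not yet specified. A generic score is broadcast to every dimension, as the aggregator rule describes, and an output with neither an evaluation nor a recommendation is rejected.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Union

DIMENSIONS = ["time", "fatigue", "cognitive_load"]  # the framework's {d1, d2, ...}; illustrative

Evaluation = Union[float, Dict[str, float]]  # generic score, or per-dimension scores

def expand(evaluation: Evaluation, dims: List[str] = DIMENSIONS) -> Dict[str, float]:
    """Treat a single generic score as the same score on every dimension."""
    if isinstance(evaluation, dict):
        return dict(evaluation)
    return {d: float(evaluation) for d in dims}

@dataclass
class Recommendation:
    description: str                 # textual change for the designer
    delta: Evaluation                # expected change in evaluation ("Δevaluation")
    transform: Optional[str] = None  # hypothetical machine-applicable transformation

@dataclass
class ModuleOutput:
    evaluation: Optional[Evaluation] = None
    recommendations: List[Recommendation] = field(default_factory=list)

    def __post_init__(self) -> None:
        # Every module must output at least one of the two kinds of result.
        if self.evaluation is None and not self.recommendations:
            raise ValueError("a module must output an evaluation, a recommendation, or both")
```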

Aggregator Inputs

The aggregator receives as input the outputs of all the modules.

Aggregator Outputs

Outputs for the aggregator are the same as the outputs for each module. The difference is that the aggregator will consider all the module outputs, and arrive at a merged output based on the past performance of each of the modules.
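The proposal does not fix how "past performance" becomes a weight. One standard option, swapped in here purely for illustration, is exponential-weights (Hedge-style) updating: after each user study, every module's weight on a dimension is multiplied by exp(-eta * error) and the weights are renormalized. The learning rate `eta` and the absolute-error loss are assumptions, and the loss should be scaled to the units of the dimension.

```python
import math
from typing import Dict

def update_weights(weights: Dict[str, float], predictions: Dict[str, float],
                   observed: float, eta: float = 0.5) -> Dict[str, float]:
    """One round of exponential-weights updating for a single utility dimension.
    Modules whose predictions were further from the observed user-study value lose weight."""
    new = {m: w * math.exp(-eta * abs(predictions[m] - observed)) for m, w in weights.items()}
    total = sum(new.values())
    return {m: w / total for m, w in new.items()}
```

Running one update per dimension per study yields the per-dimension weights used in the most detailed experimental condition.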

Evaluation and Recommendation via Modules

- Owner: E J Kalafarski

A "meta-module" called the aggregator will be responsible for assembling and formatting the output of all other modules into a structure that is both extensible and immediately usable by either an automated designer or a human designer.

Requirements

The aggregator's functionality, then, is defined by its inputs, the outputs of the other modules, and the desired output of the system as a whole, per its position in the architecture. Its purpose is largely formatting and reconciliation of the products of the multitudinous (and extensible) modules. The output of the aggregator must meet several requirements: first, to generate a set of human-readable suggestions for the improvement of the given interface; second, to generate a machine-readable, but also analyzable, evaluation of the various characteristics of the interface and accompanying user traces.

From these specifications, it is logical to assume that a common language or format will be required for the output of individual modules. We propose an XML-based file format, allowing: (1) a section for the standardized identification of problem areas, applicable rules, and proposed improvements, generalized by the individual module and mapped to a single element, or group of elements, in the original interface specification; (2) a section for specification of generalizable "utility" functions, allowing a module to specify how much a measurable quantity of utility is positively or negatively affected by properties of the input interface; (3) new, user-definable sections for evaluations of the given interface not covered by the first two sections. The first two sections are capable of conveying the vast majority of module outputs predicted at this time, but the XML can extensibly allow modules to pass on whatever information may become prominent in the future.

Specification

<module id="Fitts-Law">
  <interface-elements>
    <element>
      <desc>submit button</desc>
      <problem>
        <desc>size</desc>
        <suggestion>width *= 2</suggestion>
        <suggestion>height *= 2</suggestion>
        <human-suggestion>Increase size relative to other elements</human-suggestion>
      </problem>
    </element>
  </interface-elements>
  <utility>
    <dimension>
      <desc>time</desc>
      <value>0:15:35</value>
    </dimension>
    <dimension>
      <desc>frustration</desc>
      <value>pulling hair out</value>
    </dimension>
    <dimension>
      <desc>efficiency</desc>
      <value>13.2s/KPM task</value>
      <value>0.56m/CPM task</value>
    </dimension>
  </utility>
  <tasks>
    <task>
      <desc>complete form</desc>
    </task>
    <task>
      <desc>lookup SSN</desc>
    </task>
    <task>
      <desc>format phone number</desc>
    </task>
  </tasks>
</module>

Logic

This file provided by each module is then the input for the aggregator. The aggregator's most straightforward function is the compilation of the "problem areas," assembling them and noting problem areas and suggestions that are recommended by more than one module, and weighting them accordingly in its final report. These weightings can begin in an equal state, but the aggregator should be capable of learning iteratively which modules' results are most relevant to the user and update weightings accordingly. This may need to be accomplished with manual tuning, or a machine-learning algorithm capable of determining which modules most often agree with others.
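The compilation of problem areas can be sketched with the standard-library XML parser. The function pools `(element, problem, suggestion)` triples across module reports in the format above and scores each triple by the summed weight of the modules proposing it, so suggestions made by several modules rank higher. Exact string matching between modules and a default weight of 1.0 for unknown modules are simplifying assumptions. Human-readable suggestions could be pooled the same way.

```python
import xml.etree.ElementTree as ET
from collections import defaultdict
from typing import Dict, List, Tuple

Key = Tuple[str, str, str]  # (element description, problem description, suggestion)

def collect_suggestions(module_xml: List[str], weights: Dict[str, float]) -> List[Tuple[Key, float]]:
    """Pool machine-readable suggestions across module reports, weighted by module."""
    support: Dict[Key, float] = defaultdict(float)
    for doc in module_xml:
        root = ET.fromstring(doc)
        w = weights.get(root.get("id"), 1.0)  # unknown modules start at equal weight
        for element in root.iterfind("interface-elements/element"):
            elem_desc = element.findtext("desc", default="").strip()
            for problem in element.iterfind("problem"):
                prob_desc = problem.findtext("desc", default="").strip()
                for s in problem.iterfind("suggestion"):
                    support[(elem_desc, prob_desc, (s.text or "").strip())] += w
    return sorted(support.items(), key=lambda kv: kv[1], reverse=True)
```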

Secondly, the aggregator compiles the utility functions provided by the module specs. This, again, is a summation of similarly-described values from the various modules.
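A minimal sketch of that summation, assuming utility values are numeric. Entries such as "0:15:35" or "pulling hair out" in the sample specification would need an agreed parsing convention; this sketch simply skips any value that does not parse as a number.

```python
import xml.etree.ElementTree as ET
from collections import defaultdict
from typing import Dict, List

def sum_utilities(module_xml: List[str]) -> Dict[str, float]:
    """Sum numeric <value> entries that share a <dimension><desc> across module reports."""
    totals: Dict[str, float] = defaultdict(float)
    for doc in module_xml:
        for dim in ET.fromstring(doc).iterfind("utility/dimension"):
            name = dim.findtext("desc", default="").strip()
            for v in dim.iterfind("value"):
                try:
                    value = float(v.text)
                except (TypeError, ValueError):
                    continue  # non-numeric or empty value: skipped in this sketch
                totals[name] += value
    return dict(totals)
```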

When confronted with user-defined sections of the XML spec, the aggregator is primarily responsible for compiling them and sending them along to the output of the machine. Even if the aggregator does not recognize a section or property of the evaluative spec, if it sees the property reported by more than one module it should be capable of aggregating these intelligently. In future versions of the spec, it should be possible for a module to provide instructions for the aggregator on how to handle unrecognized sections of the XML.

From these compilations, then, the aggregator should be capable of outputting both aggregated human-readable suggestions on interface improvements for a human designer, as well as a comprehensive evaluation of the interface's effectiveness at the given task traces. Again, this is dependent on the specification of the system as a whole, but is likely to include measures and comparisons, graphings of task versus utility, and quantitative measures of an element's effectiveness.

This section is necessarily defined by the output of the individual modules (which I already expect to be of varied and arbitrary structure) and the desired output of the machine as a whole. It will likely need to be revised heavily after other modules and the "Parallel Framework" section are defined. E J Kalafarski 12:34, 24 April 2009 (UTC)

This section describes the aggregator, which takes the output of multiple independent modules and aggregates the results to provide (1) an evaluation and (2) recommendations for the user interface. We should explain how the aggregator weights the output of different modules (this could be based on historical performance of each module, or perhaps based on E.J.'s cognitive/HCI guidelines).

Sample Modules

CPM-GOMS

- Owners: Steven Ellis

This module will provide interface evaluations and suggestions based on a CPM-GOMS model of cognition for the given interface. It will provide a quantitative, predictive, cognition-based parameterization of usability. From empirically collected data, user trajectories through the model (critical paths) will be examined, highlighting bottlenecks within the interface, and offering suggested alterations to the interface to induce more optimal user trajectories.
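In CPM-GOMS, task time is predicted from the critical path through a PERT-style schedule chart of perceptual, cognitive, and motor operators that can run in parallel. The sketch below computes that longest path from hypothetical operator durations and precedence constraints (the operator names and millisecond values are made up). The operators on the returned path are the candidate bottlenecks the module would report.

```python
from graphlib import TopologicalSorter
from typing import Dict, List, Set, Tuple

def critical_path(durations: Dict[str, float],
                  preds: Dict[str, Set[str]]) -> Tuple[float, List[str]]:
    """Longest path through a schedule chart.
    durations: operator -> duration (ms); preds: operator -> operators that must finish first.
    Every operator, including pure predecessors, must appear in `durations`."""
    graph = {op: preds.get(op, set()) for op in durations}
    finish: Dict[str, float] = {}
    parent: Dict[str, str] = {}
    for op in TopologicalSorter(graph).static_order():
        start = 0.0
        for p in graph[op]:
            if finish[p] > start:  # the latest-finishing predecessor determines the start time
                start = finish[p]
                parent[op] = p
        finish[op] = start + durations[op]
    end = max(finish, key=finish.get)
    path = [end]
    while path[-1] in parent:
        path.append(parent[path[-1]])
    return finish[end], path[::-1]

# Hypothetical schedule: recalling a label runs in parallel with deciding and moving.
durations = {"perceive": 100, "decide": 50, "move": 300, "recall_label": 200, "click": 100}
preds = {"decide": {"perceive"}, "move": {"decide"},
         "recall_label": {"perceive"}, "click": {"move", "recall_label"}}
```

Here the path perceive, decide, move, click takes 550 ms, while `recall_label` has slack. Shortening the mouse movement (for example, by enlarging the target) would shorten the task, but speeding up recall would not.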

I’m hoping to have some input on this section, because it seems to be the crux of the “black box” into which we take the inputs of interface description, user traces, etc. and get our outputs (time, recommendations, etc.). I know at least a few people have pretty strong thoughts on the matter and we ought to discuss the final structure.

That said – my proposal for the module:

- In my opinion the concept of the Model Human Processor (at least as applied in CPM) is outdated – it’s too economic/overly parsimonious in its conception of human activity. I think we need to create a structure which accounts for more realistic conditions of HCI including multitasking, aspects of distributed cognition (and other relevant uses of tools – as far as I can tell CPM doesn’t take into account any sort of productivity aids), executive control processes of attention, etc. ACT-R appears to take steps towards this but we would probably need to look at their algorithms to know for sure.

- Critical paths will continue to play an important role – we should in fact emphasize that part of this tool’s purpose will be to describe not only how the interface should be modified to best fit a critical path, but also how users ought to be instructed in their use of that path. This feedback mechanism could be bidirectional – if the model’s predictions of the user’s goals are incorrect, the critical path it determines will also be incorrect and the interface inherently suboptimal. The user could be prompted with a tooltip briefly explaining why and how the interface has changed, along with options to revert, to select other configurations (presented in terms of goals), and to view a short video detailing how to use the interface properly.

- Call me crazy, but if we assume designers will be willing to code a model of their interfaces into our ACT-R-esque language, could we make that model fairly transparent to the user? The user could then use a GUI to input their goals, find an analogue in the program, and have the program rearrange its interface to fit their needs. Even if this is not useful to users, such dynamic modeling could really help designers (IMO).

- I think the module should do its best to accept models written for ACT-R and any other cognitive architectures out there – this gives us the best chance of early adoption.

- I would particularly appreciate input on the number/complexity/type of inputs we’ll be using, as well as the same qualities for the output.

HCI Guidelines

- Owner: E J Kalafarski

Shneiderman's Eight Golden Rules and Jakob Nielsen's Ten Heuristics are perhaps the most famous and well-regarded heuristic design guidelines to emerge over the last twenty years. Although the explicit theoretical basis for such heuristics is controversial and not well explored, the empirical success of these guidelines is established and accepted. This module will extract up to three or four common (that is, intersecting) principles from these accepted guidelines and apply them to the input interface.

As an example, we identify an analogous principle that appears in both Shneiderman ("Reduce short-term memory load")[11] and Nielsen ("Recognition rather than recall/Minimize the user's memory load")[12]. The input interface is then evaluated for its consideration of the principle, based on an explicit formal description of the interface, such as XAML or XUL. The module attempts to determine how effectively the interface demonstrates the principle. When analyzing an interface for several principles that may conflict in a given context, the module uses a hard-coded but iterative (and evolving) weighting of these principles, based on (1) how often they appear in the training set of accepted guideline sets, (2) how analogous a heuristic principle is to a cognitive principle in a parallel training set, and (3) how effective the principle's associated suggestion is found to be through a feedback mechanism.
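The three weighting factors could be combined in many ways. In the hypothetical sketch below, each principle's weight is a fixed linear combination of the three factors, and factor (3) is updated from feedback with an exponential moving average. The coefficients (0.4, 0.3, 0.3), the learning rate, the neutral starting effectiveness of 0.5, and all names are placeholder assumptions, not values from this proposal.

```python
from dataclasses import dataclass


@dataclass
class Principle:
    name: str
    frequency: float             # (1) share of training guideline sets containing it, 0..1
    cognitive_analogy: float     # (2) similarity to a cognitive principle, 0..1
    effectiveness: float = 0.5   # (3) running estimate from feedback, 0..1 (neutral start)


def weight(p: Principle, coeffs: tuple[float, float, float] = (0.4, 0.3, 0.3)) -> float:
    """Hypothetical linear combination of the three weighting factors."""
    a, b, c = coeffs
    return a * p.frequency + b * p.cognitive_analogy + c * p.effectiveness


def record_feedback(p: Principle, suggestion_helped: bool, rate: float = 0.2) -> None:
    """Move the effectiveness estimate toward 1 (helped) or 0 (did not help)."""
    target = 1.0 if suggestion_helped else 0.0
    p.effectiveness += rate * (target - p.effectiveness)


def resolve_conflict(principles: list[Principle]) -> Principle:
    """When principles conflict on an element, defer to the highest-weighted one."""
    return max(principles, key=weight)
```

Because only the effectiveness term changes at run time, the weighting stays "hard-coded but iterative": the structure is fixed while the feedback factor evolves.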

Inputs

- A formal description of the interface and its elements (e.g. buttons).

- A formal description of a particular task and the possible paths through a subset of interface elements that permit the user to accomplish that task.

Output

Standard XML-formatted file containing problem areas of the input interface, suggestions for each problem area based on principles that were found to have a strong application to a problem element and the problem itself, and a human-readable generated analysis of the element's affinity for the principle. Quantitative outputs will not be possible based on heuristic guidelines, and the "utility" section of this module's output is likely to be blank.
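The output schema has not been fixed yet. A minimal sketch of what such a file might look like, generated with Python's standard `xml.etree.ElementTree`, is below; the element and attribute names (`evaluation`, `problem`, `principle`, `suggestion`, `analysis`, `utility`) and the example content are hypothetical.

```python
import xml.etree.ElementTree as ET


def build_report(problems: list[dict]) -> str:
    """Serialize problem areas into a (hypothetical) XML report for the aggregator."""
    root = ET.Element("evaluation", module="hci-guidelines")
    for prob in problems:
        area = ET.SubElement(root, "problem", element=prob["element"])
        ET.SubElement(area, "principle").text = prob["principle"]
        ET.SubElement(area, "suggestion").text = prob["suggestion"]
        ET.SubElement(area, "analysis").text = prob["analysis"]
    # Heuristic guidelines give no quantitative estimate, so utility stays empty.
    ET.SubElement(root, "utility")
    return ET.tostring(root, encoding="unicode")


report = build_report([{
    "element": "searchForm",
    "principle": "Reduce short-term memory load",
    "suggestion": "Show recent queries instead of requiring users to retype them",
    "analysis": "Users must recall previous search terms from memory.",
}])
print(report)
```

Keeping an explicit (empty) `utility` element lets an aggregator treat every module's file the same way, even when a module cannot produce a number.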

This section could include an example or two of established design guidelines that could easily be implemented as modules.

Fitts's Law

- Owner: Jon Ericson

This module provides an estimate of the required time to complete various tasks that have been decomposed into formalized sequences of interactions with interface elements, and will provide evaluations and recommendations for optimizing the time required to complete those tasks using the interface.

Inputs

1. A formal description of the interface and its elements (e.g. buttons).

2. A formal description of a particular task and the possible paths through a subset of interface elements that permit the user to accomplish that task.

3. The physical distances between interface elements along those paths.

4. The width of those elements along the most likely axes of motion.

5. Device (e.g. mouse) characteristics including start/stop time and the inherent speed limitations of the device.

Output

The module will then use the Shannon formulation of Fitts's Law to compute the average time needed to complete the task along those paths.
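The Shannon formulation predicts the time of one pointing movement as MT = a + b · log2(D/W + 1), where D is the distance to the target, W its width along the axis of motion, and a and b are empirically fitted device constants (the intercept a absorbs start/stop time). The minimal sketch below assumes each path is a list of (distance, width) pairs and, as a simplification, averages over paths with equal weight; a fuller version might weight paths by how likely users are to take them. The constants in the example are illustrative, not measured values.

```python
import math


def movement_time(distance: float, width: float, a: float, b: float) -> float:
    """Shannon formulation of Fitts's law: MT = a + b * log2(D / W + 1)."""
    if width <= 0:
        raise ValueError("target width must be positive")
    return a + b * math.log2(distance / width + 1)


def path_time(steps: list[tuple[float, float]], a: float, b: float) -> float:
    """Predicted time for one path, given (distance, width) pairs for each pointing step."""
    return sum(movement_time(d, w, a, b) for d, w in steps)


def average_task_time(paths: list[list[tuple[float, float]]], a: float, b: float) -> float:
    """Unweighted mean of the predicted times over all candidate paths."""
    return sum(path_time(p, a, b) for p in paths) / len(paths)


# Illustrative constants (seconds, seconds per bit), not measurements.
A, B = 0.1, 0.15
direct = [(300, 20)]                # one movement: log2(16) = 4 bits
via_menu = [(300, 20), (140, 20)]   # two movements: 4 bits + 3 bits
print(average_task_time([direct, via_menu], A, B))
```

The recommendations follow directly from the formula: enlarging a frequently used target (larger W) or moving it closer to the preceding one (smaller D) lowers the index of difficulty and therefore the predicted time.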

Affordances

- Owner: Jon Ericson

This simple module will provide interface evaluations and recommendations based on a measure of the extent to which the user perceives the relevant affordances of the interface when performing a number of specified tasks.

Inputs

Formalized descriptions of...

1. Interface elements

2. Their associated actions

3. The functions of those actions

4. A particular task

5. User traces for that task.

Inputs (1-4) are then used to generate a "user-independent" space of possible functions that the interface is capable of performing with respect to a given task -- what the interface "affords" the user. From this set of possible interactions, our model will then determine the subset of optimal paths for performing a particular task. The user trace (5) is then used to determine what functions actually were performed in the course of a given task of interest and this information is then compared to the optimal path data to determine the extent to which affordances of the interface are present but not perceived.
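One way to realize this comparison, sketched below under simplifying assumptions, is to encode inputs (1-4) as a state graph in which each edge is an action labeled with its function, find every function that lies on some shortest path from the task's start state to its goal state (breadth-first search, counting each action as one step), and subtract the functions that appear in the user trace. The graph encoding and function names are hypothetical, and "optimal" here means fewest actions rather than, for example, least time.

```python
from collections import deque


def optimal_functions(transitions, start, goal):
    """Functions lying on at least one shortest path from start to goal.

    transitions: state -> list of (function, next_state)
    """
    # Forward BFS: fewest actions needed to reach each state.
    dist = {start: 0}
    queue = deque([start])
    while queue:
        s = queue.popleft()
        for _, nxt in transitions.get(s, []):
            if nxt not in dist:
                dist[nxt] = dist[s] + 1
                queue.append(nxt)
    if goal not in dist:
        return set()
    # Walk backward from the goal, keeping only edges that advance distance by one.
    rev = {}
    for s, edges in transitions.items():
        for f, n in edges:
            rev.setdefault(n, []).append((f, s))
    funcs, seen, queue = set(), {goal}, deque([goal])
    while queue:
        n = queue.popleft()
        for f, s in rev.get(n, []):
            if s in dist and dist[s] + 1 == dist[n]:
                funcs.add(f)
                if s not in seen:
                    seen.add(s)
                    queue.append(s)
    return funcs


def unperceived(transitions, start, goal, trace):
    """Relevant affordances (optimal-path functions) never used in the user trace."""
    return optimal_functions(transitions, start, goal) - set(trace)
```

For instance, if a document can be saved either through File > Save or with a keyboard shortcut, and a user's trace shows only the menu route, the shortcut is reported as an affordance that is present but not perceived.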

Output

The output of this module is a simple ratio of (affordances perceived) / [(relevant affordances present) * (time to complete task)] which provides a quantitative measure of the extent to which the interface is "natural" to use for a particular task.
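The ratio itself is straightforward to compute. The sketch below (a hypothetical `naturalness` helper) assumes affordances are represented as sets of function labels, counts a perceived affordance only if it is also relevant to the task, and takes task time in seconds.

```python
def naturalness(perceived: set[str], present: set[str], task_time: float) -> float:
    """(affordances perceived) / [(relevant affordances present) * (time to complete task)].

    Assumption: only perceived affordances that are also relevant are counted.
    """
    if not present or task_time <= 0:
        raise ValueError("need at least one relevant affordance and a positive task time")
    return len(perceived & present) / (len(present) * task_time)
```

Because the denominator includes time, the score has units of 1/seconds, so it is only comparable across tasks and interfaces measured with the same time unit.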

Workflow, Multi-tasking and Interruptions

- Owner: Andrew Bragdon

Assignment 13

- Scientific Study of Multi-tasking Workflow and the Impact of Interruptions

- We will undertake detailed studies to help understand the following questions:

- How does the size of a user's working set impact interruption resumption time?

- How does the size of a user's working set, when used for rapid multi-tasking, impact performance metrics?

- How does a user interface which supports multiple simultaneous working sets benefit interruption resumption time?

- No Dependencies

- Meta-work Assistance Tool

- We will perform a series of ecologically valid studies comparing user performance between a state-of-the-art task management system (control group) and our meta-work assistance tool (experimental group).

- Dependent on core study completion, as some of the specific design decisions will be driven by the results of this study. However, it is worth pointing out that this separate contribution can be researched in parallel to the core study.

- Baseline Comparison Between Module-based Model of HCI and Core Multi-tasking Study

- We will compare the results of the above-mentioned study in multi-tasking against results predicted by the module-based model of HCI in this proposal; this will give us an important baseline comparison, particularly given that multi-tasking and interruption involve higher brain functioning and are therefore likely difficult to predict

- Dependent on core study completion, as well as most of the rest of the proposal being completed to the point of being testable

Text for Assignment 12:

Add text here about how this can be used to evaluate automatic framework

There are at least two levels at which users work (Gonzales, et al., 2004). Users accomplish individual low-level tasks that are part of larger working spheres; for example, an office worker might send several emails, create several Post-It (TM) note reminders, and then edit a Word document, each of these smaller tasks being part of a single larger working sphere of "adding a new section to the website." It is therefore important to understand this larger workflow context, which often involves extensive multi-tasking as well as switching between a variety of computing devices and traditional tools such as notebooks. That study found that the information workers surveyed typically switch individual tasks every 2 minutes and have many simultaneous working spheres, which they switch between on average every 12 minutes. This frenzied pace of switching tasks and working spheres suggests that users will not use a single application or device for long periods of time, and that affordances supporting this characteristic pattern of information work are important.

The purpose of this module is to integrate existing work on multi-tasking, interruption and higher-level workflow into a framework which can predict user recovery times from interruptions. Specifically, the goals of this framework will be to:

- Understand the role of the larger workflow context in user interfaces

- Understand the impact of interruptions on user workflow

- Understand how to design software which fits into the larger working spheres in which information work takes place

It is important to point out that because workflow and multi-tasking rely heavily on higher-level brain functioning, it is unrealistic within the scope of this grant to propose a system that can predict user performance given a description of an arbitrary set of software programs. Therefore, we believe this module will function much more in a qualitative role, providing context to the rest of the model. Specifically, our findings related to interruption and multi-tasking will advance the basic research question of "how do users react to interruptions when using working sets of varying sizes?". This core HCI contribution will help to inform the rest of the outputs of the model in a qualitative manner.

Inputs

N/A

Outputs

N/A

Working Memory Load

- Owner: Gideon Goldin

This module measures how much information the user needs to retain in memory while interacting with the interface and makes suggestions for improvements.

Inputs

- Visual Stimuli

- Audio Stimuli

Outputs

- Remembered percepts

- Half-Life of percepts
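The proposal does not yet specify how these outputs would be computed. One hypothetical sketch assumes exponential forgetting, so a percept seen t seconds ago is still retained with probability 0.5^(t/h) for half-life h, and caps the number of remembered percepts at about four items, following Cowan (2001) in the references. The half-life, retention threshold, and capacity are free parameters that would need to be fitted to data, and all names are illustrative.

```python
def retention(elapsed: float, half_life: float) -> float:
    """Probability a percept is still retained after `elapsed` seconds (exponential decay)."""
    return 0.5 ** (elapsed / half_life)


def remembered_percepts(onsets: dict[str, float], now: float, half_life: float,
                        capacity: int = 4, threshold: float = 0.5) -> list[str]:
    """Percepts still likely held in working memory at time `now`, most recent first.

    A percept counts as remembered if its retention probability is at least
    `threshold`; at most `capacity` of the most recent such percepts are kept.
    """
    alive = [(t, name) for name, t in onsets.items()
             if t <= now and retention(now - t, half_life) >= threshold]
    alive.sort(reverse=True)  # most recent onset first
    return [name for _, name in alive[:capacity]]
```

An interface that requires the user to carry more items across screens than this model retains would be flagged, with suggestions such as keeping the needed information visible (recognition rather than recall).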

Automaticity of Interaction

- Owner: Gideon Goldin

This module measures how easily interaction with the interface becomes automatic with experience and makes suggestions for improvements.

Inputs

- Interface

- User goals

Outputs

- Learning curve
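A common quantitative description of a learning curve is the power law of practice, T(N) = a + b · N^(-c), where T(N) is the time for the N-th trial; the Logan (1988) instance-theory paper in the references offers one account of why automatization follows roughly this shape. The sketch below assumes, for simplicity, that the asymptote a is zero, so the curve can be fitted by ordinary least squares on log T versus log N. The names are hypothetical, and a real module would likely fit a as well.

```python
import math


def power_law_time(trial: int, a: float, b: float, c: float) -> float:
    """Power law of practice: T(N) = a + b * N ** (-c)."""
    return a + b * trial ** (-c)


def fit_power_law(times: list[float]) -> tuple[float, float]:
    """Fit T(N) = b * N ** (-c) (asymptote assumed 0) to trial times for N = 1..n.

    Uses least squares on log T = log b - c * log N; needs at least two trials.
    Returns (b, c).
    """
    if len(times) < 2:
        raise ValueError("need at least two trials")
    xs = [math.log(n) for n in range(1, len(times) + 1)]
    ys = [math.log(t) for t in times]
    mx = sum(xs) / len(xs)
    my = sum(ys) / len(ys)
    slope = (sum((x - mx) * (y - my) for x, y in zip(xs, ys))
             / sum((x - mx) ** 2 for x in xs))
    return math.exp(my - slope * mx), -slope
```

A larger fitted exponent c means faster automatization, so comparing c across interface variants for the same user goals is one possible way for the module to rank them.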

Anti-Pattern Conflict Resolution

- Owner: Ian Spector

Interface design patterns are defined as reusable elements that provide solutions to common problems. For instance, we expect that clicking a left-pointing arrow in the top left corner of a window will take the user to the previous screen. Furthermore, we expect buttons in the top left corner of a window to relate in some way to navigation.

An anti-pattern is a design pattern that breaks standard design conventions, creating more problems than it solves. An example of an anti-pattern would be replacing the 'back' button in a web browser with a 'view history' button.

The purpose of this module would be to analyze an interface to see if any anti-patterns exist, identify where they are in the interface, and then suggest alternatives.

Inputs

- Formal interface description

- Tasks which can be performed within the interface

- A library of standard design patterns

- Outputs from the 'Affordances' module

- Uncommon / Custom additional pattern library (optional)

Outputs

- Identification of interface elements whose placement or function is contrary to the pattern library

- Recommendations for alternative functionality or placement of such elements.
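A minimal sketch of the matching step follows, assuming (hypothetically) that the pattern library maps a screen region and visual element kind to the function users expect, as in the back-arrow example above. It reports two kinds of conflict: an element that looks conventional but does something else, and an element whose function has a conventional location that it does not occupy. The `Pattern` and `Element` types and the region labels are illustrative, not a defined format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pattern:
    region: str             # e.g. "top-left"
    element_kind: str       # e.g. "left-arrow"
    expected_function: str  # e.g. "navigate-back"


@dataclass(frozen=True)
class Element:
    name: str
    region: str
    kind: str
    function: str


def find_anti_patterns(elements: list[Element],
                       library: list[Pattern]) -> list[tuple[Element, str]]:
    """Return (element, recommendation) pairs for elements that contradict the library."""
    expected = {(p.region, p.element_kind): p.expected_function for p in library}
    usual_place = {p.expected_function: p.region for p in library}
    findings = []
    for el in elements:
        # Function conflict: looks like a known pattern but does something else.
        want = expected.get((el.region, el.kind))
        if want is not None and want != el.function:
            findings.append((el, f"'{el.name}' looks like {want} but performs "
                                 f"{el.function}; restore {want} or change its appearance"))
        # Placement conflict: the function has a conventional region elsewhere.
        region = usual_place.get(el.function)
        if region is not None and region != el.region:
            findings.append((el, f"move '{el.name}' to the {region} region"))
    return findings
```

The browser example maps onto the first check: a left arrow in the top-left region that opens the history view would be flagged as an element that looks like "navigate-back" but performs "view-history".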

Integration into the Design Process

- Owner: Ian Spector

This section outlines the interface design process, the stages at which our proposal fits in, and how it fits in at each stage.

The interface design process is critical to the creation of a quality end product. The process of creating an interface can also be used as a model for analyzing a finished one.

There are a number of different philosophies on how best to design software and, in turn, interfaces. Currently, agile development using an incremental process such as Scrum has become a widely known and commonly practiced approach.

The steps for creating interfaces vary significantly from text to text, although the Common Front Group at Cornell has succinctly reduced this variety to six simple steps:

- Requirement Sketching

- Conceptual Design

- Logical Design

- Physical Design

- Construction

- Usability Testing

These steps can be condensed further into information architecture design followed by physical design and testing.

Preliminary Results

Workflow, Multi-tasking, and Interruption

I. Goals

The goals of the preliminary work are to gain qualitative insight into how information workers practice metawork, and to determine whether people might be better supported by software that facilitates metawork and helps them manage interruptions. Thus, the preliminary work should investigate, and demonstrate, the need for and impact of the core goals of the project.

II. Methodology

Seven information workers, ages 20-38 (5 male, 2 female), were interviewed to determine which methods they use to "stay organized". An initial list of metawork strategies was established from two pilot interviews, and then a final list was compiled. Participants then responded to a series of 17 questions designed to gain insight into their metawork strategies and process. In addition, verbal interviews were conducted to get additional open-ended feedback.

III. Final Results

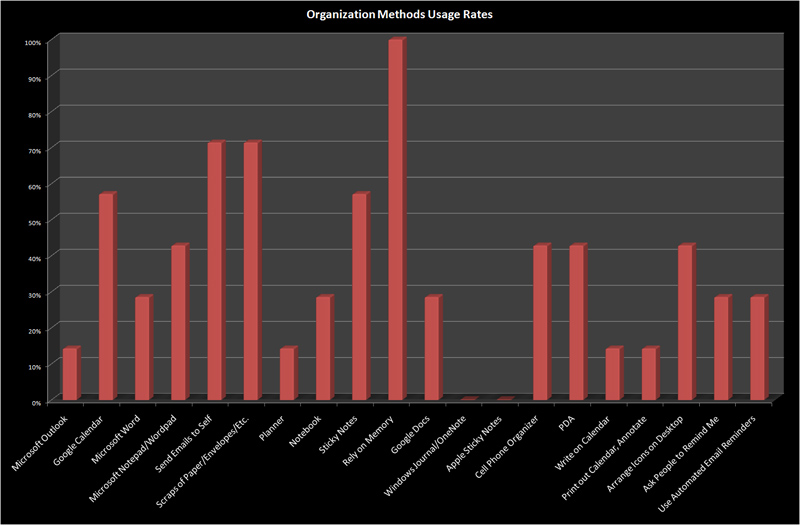

A histogram of methods people use to "stay organized" in terms of tracking things they need to do (TODOs), appointments and meetings, etc. is shown in the figure below.

Participants also reported using a number of other methods, including:

- iCal

- Notes written in xterms

- "Inbox zero" method of email organization

- iGoogle Notepad (for tasks)

- Tagging emails as "TODO", "Important", etc.

- Things (Organizer Software)

- Physical items placed to "remind me of things"

- Sometimes arranging windows on desk

- Keeping browser tabs open

- Bookmarking web pages

- Keeping programs/files open, scrolled to certain locations, sometimes with things selected

In addition, three participants said that when interrupted they "rarely" or "very rarely" were able to resume the task they were working on prior to the interruption. Three of the participants said that they would not actively recommend their metawork strategies for other people, and two said that staying organized was "difficult".

Four participants were neutral toward the idea of new tools to help them stay organized, and three said that they would like to have such a tool or tools.

IV. Discussion

These results quantitatively support our hypothesis that there is no clearly dominant set of metawork strategies employed by information workers. This highly fragmented landscape is surprising, given that most information workers work in a similar environment (at a desk, on the phone, in meetings) and with the same types of tools (computers, pens, paper, etc.). We believe this suggests that there are complex tradeoffs between these methods and that no single method is sufficient. We therefore believe that users will be better supported by a new set of software-based metawork tools.

[Criticisms]

- Owner: Andrew Bragdon

Any criticisms or questions we have regarding the proposal can go here.

References

1. Borchers, Jan O. "A Pattern Approach to Interaction Design." 2000.

2. http://stl.cs.queensu.ca/~graham/cisc836/lectures/readings/tetzlaff-guidelines.pdf

3. Ivory, M. and Hearst, M. "The State of the Art in Automated Usability Evaluation of User Interfaces." ACM Computing Surveys (CSUR), 2001.

4. Czerwinski, Horvitz, and White. "A Diary Study of Task Switching and Interruptions." Proceedings of the SIGCHI conference on Human factors in computing systems, 2004.

5. "Graphical Histories for Visualization: Supporting Analysis, Communication, and Evaluation." InfoVis 2008.

6. Callahan-2006-MED

7. Callahan-2006-VVM

8. Bavoil-2005-VEI

9. "Querying and Creating Visualizations by Analogy."

10. "VisComplete: Automating Suggestions from Visualization Pipelines."

11. http://faculty.washington.edu/jtenenbg/courses/360/f04/sessions/schneidermanGoldenRules.html

12. http://www.useit.com/papers/heuristic/heuristic_list.html

13. http://en.wikipedia.org/wiki/Baddeley%27s_model_of_working_memory

14. http://en.wikipedia.org/wiki/The_Magical_Number_Seven,_Plus_or_Minus_Two

15. Cowan, N. (2001). The magical number 4 in short-term memory: A reconsideration of mental storage capacity. Behavioral and Brain Sciences, 24, 87-185.

16. http://en.wikipedia.org/wiki/Chunking_(psychology)

17. http://en.wikipedia.org/wiki/Priming_(psychology)

18. http://en.wikipedia.org/wiki/Subitizing

19. http://en.wikipedia.org/wiki/Learning#Mathematical_models_of_learning

20. http://74.125.95.132/search?q=cache:IZ-Zccsu3SEJ:psych.wisc.edu/ugstudies/psych733/logan_1988.pdf+logan+isntance+teory&cd=1&hl=en&ct=clnk&gl=us&client=firefox-a

21. http://www.sciencedirect.com/science?_ob=ArticleURL&_udi=B6VH9-4SM7PFK-4&_user=10&_rdoc=1&_fmt=&_orig=search&_sort=d&view=c&_acct=C000050221&_version=1&_urlVersion=0&_userid=10&md5=10cd279fa80958981fcc3c06684c09af

22. http://en.wikipedia.org/wiki/Fluency_heuristic