CS295J/Project Schedule:Steve

Revision as of 17:56, 22 November 2011

Quality assessment (QA) is an important part of the quality control (QC) process. MRI and other imaging methods sometimes suffer from problems such as motion artifacts (e.g., when a subject moves during a scan), low signal-to-noise ratio (SNR), or poor contrast, all of which complicate drawing insights from the images. In a perfect world, the intensity of a given pixel or voxel would be determined only by the underlying brain data, but this is not always the case.

This project explores the types of QA involved in accepting or rejecting a scan used for brain research. QA usually happens at more than one scale. So far we are finding that there is often an image-based QA, which does not require interpreting the underlying brain data, and a semantics-based QA that may happen later in the research process.

How do analysts assess scan quality in MRI? How does that inform tool design?

Some directions:

- Trust: Brain imaging inherently presents uncertain data to the researcher/analyst. Part of that uncertainty comes from the imaging method (see the artifacts discussion above). Another part comes from the fact that there is usually no reference data to verify what analysts see (unless the brain is dissected). In cases where the brain structure may be changed due to a clinical case, analysts may not even know a priori what they're looking for. The 'trust' question arises because at some point in the analysis process, the analyst must trust that the tool is providing a correct visual mapping of the underlying data. Suppose a tool interface has an 'ugly' and a 'pretty' condition; we hypothesize that the analyst is more likely to trust the pretty interface than the ugly one, and that this might result in spending less time checking for artifacts or otherwise verifying what s/he is seeing.

- Crowdsourcing: If we can separate QA into two processes -- one of which requires little or no anatomical knowledge -- can we outsource the image-level assessment task to naive Turkers? How does their scoring compare to that of research assistants on a dataset of scans?
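One way the crowdsourcing comparison could be quantified is with a chance-corrected agreement score. The sketch below computes Cohen's kappa between two raters' accept/reject labels for the same scans. The choice of kappa, the function name `cohens_kappa`, and the example labels are all assumptions made for illustration; the project does not specify an agreement measure, and the labels are not real data.

```python
from collections import Counter


def cohens_kappa(ratings_a, ratings_b):
    """Cohen's kappa for two raters who labelled the same items."""
    if len(ratings_a) != len(ratings_b) or not ratings_a:
        raise ValueError("ratings must be non-empty and of equal length")
    n = len(ratings_a)

    # Fraction of items on which the two raters gave the same label.
    observed = sum(a == b for a, b in zip(ratings_a, ratings_b)) / n

    # Agreement expected by chance, from each rater's label frequencies.
    counts_a = Counter(ratings_a)
    counts_b = Counter(ratings_b)
    expected = sum(counts_a[label] * counts_b[label] for label in counts_a) / (n * n)

    if expected == 1.0:
        return 1.0  # both raters used one identical label for every item
    return (observed - expected) / (1.0 - expected)


# Hypothetical labels for 10 scans (illustrative only, not study data).
assistant_labels = ["accept"] * 5 + ["reject"] * 5
turker_labels = ["accept", "accept", "accept", "accept", "reject",
                 "reject", "reject", "reject", "reject", "accept"]

print(round(cohens_kappa(assistant_labels, turker_labels), 3))
```

In this made-up example the raters agree on 8 of 10 scans and chance agreement is 0.5, so kappa comes out at about 0.6. With several Turkers per scan, a multi-rater statistic would be a better fit than pairwise kappa.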

Project Schedule

Nov 1 -- Filling out the paper: Abstract, related work and intro drafted; outlines for results and methods. Update on data and contact with Win.

Nov 8 -- Test MRIs (hopefully 10) collected and organized; evaluation protocol completed

Nov 15 -- Video captures of research assistants analyzing MRIs for QA (first session); interviews (with recording) with domain scientists about QA workflow

Nov 22 -- Continued: video captures of research assistants analyzing MRIs for QA (second session); interviews (with recording) with domain scientists about QA workflow

Nov 29 -- Coding/analysis of interview videos completed; will share findings

Dec 6 -- Figures for final paper created

Dec 13 -- Final draft, two-page extended abstract of research project

Report Drafts

- media:6nov2011-cs295j-report-steveg.pdf, 19:13, 6 November 2011 (EST)

- media:22nov2011-cs295j-report-steveg.pdf, 12:56, 22 November 2011 (EST)

Data and Resource Links

- A copy of all imaging data (from 40 subjects) used to build the LONI Probabilistic Brain Atlas is downloaded and available at:

/research/graphics/users/steveg/data/brain_atlases

- STILL NEED -- MRIs with artifacts that make them "poor quality"

Related materials about MRI artifacts

Tutorial from ISMRM about artifacts. To view, id: 64181, pw: Zhou

A Short Overview of MRI Artefacts, Erasmus et al., SA Journal of Radiology, Aug. 2004

Evaluation Protocol

Subjects: 4 research assistants in Win Gongvatana's group who have been previously trained in assessing scan quality.

Data: Each subject will receive a set of 10 T1-weighted MRI scans that have been shuffled in random order. 5 scans will be "normal quality" and taken from the component scans used to build UCLA's LONI Probabilistic Brain Atlas. The other 5 scans will contain artifacts that make them "poor quality". All will be of normal, human brains.
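A minimal sketch of how the shuffled scan sets might be prepared, assuming each subject gets an independent random order over the same 10 scans (the protocol does not say whether orders differ between subjects). The scan and subject identifiers and the function name `build_scan_orders` are hypothetical.

```python
import random


def build_scan_orders(normal_ids, poor_ids, subject_ids, seed=0):
    """Return a reproducible shuffled scan order for each subject."""
    orders = {}
    for subject in subject_ids:
        # Seed per subject so each order can be regenerated later.
        rng = random.Random(f"{seed}-{subject}")
        scans = list(normal_ids) + list(poor_ids)
        rng.shuffle(scans)
        orders[subject] = scans
    return orders


# Hypothetical identifiers: 5 normal-quality and 5 poor-quality scans.
normal_scans = [f"normal_{i:02d}" for i in range(1, 6)]
poor_scans = [f"poor_{i:02d}" for i in range(1, 6)]
subjects = ["RA1", "RA2", "RA3", "RA4"]

scan_orders = build_scan_orders(normal_scans, poor_scans, subjects)
for subject, order in scan_orders.items():
    print(subject, order)
```

Seeding per subject keeps the presentation order reproducible, which helps when matching video recordings back to scans during analysis.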

Method: We will use a think-aloud protocol and ask subjects to examine and score all scans in one session. Subjects will use whichever viewing tools they regularly use to assess scan quality. The observer will prompt each subject to explain what s/he is looking for in each image. Video of the subject will be recorded during this process, and the screen will be captured in order to do a post-hoc analysis of time spent per frame.
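The post-hoc timing analysis could be sketched as follows, interpreting "frame" as the image slice shown on screen and assuming the screen capture has been hand-coded into a time-ordered list of (timestamp in seconds, slice index) events marking each change of displayed slice. That event format and the function name `dwell_time_per_slice` are assumptions, not part of the protocol.

```python
from collections import defaultdict


def dwell_time_per_slice(events, session_end):
    """Total seconds each slice was on screen.

    events: list of (timestamp_seconds, slice_index), sorted by time,
            one entry per change of the displayed slice.
    session_end: timestamp at which the subject finished the scan.
    """
    totals = defaultdict(float)
    # Pair each event with the start time of the next one; the last
    # event lasts until the end of the session.
    next_starts = [t for t, _ in events[1:]] + [session_end]
    for (start, slice_idx), next_start in zip(events, next_starts):
        if next_start < start:
            raise ValueError("events must be sorted by timestamp")
        totals[slice_idx] += next_start - start
    return dict(totals)


# Hypothetical coded events for one scan (illustrative only).
coded_events = [(0.0, 10), (2.5, 11), (4.0, 10)]
print(dwell_time_per_slice(coded_events, session_end=7.0))
```

Here slice 10 is viewed twice (2.5 s and 3.0 s) and slice 11 once (1.5 s), so revisits to the same slice accumulate into one total.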

Questionnaire: After completing the tasks, subjects will be given a questionnaire with the following questions:

- High-quality images

  - Please describe the properties or features of a scan that you would typically consider "high quality".

  - Are these features obvious or subtle?

  - How do you go about visually finding them?

- Low-quality images

  - Please describe the properties or features of a scan that you would typically consider "poor quality".

  - Are these features obvious or subtle?

  - How do you go about visually finding them?

- Current QA process

  - Which tools do you currently use to assess quality in MRI scans?

  - How much time do you typically spend doing quality checks on an MRI scan?

  - How many slices do you typically look at during this process? (Please give both the number and the percentage of total, if not all are examined.)

  - Please estimate the ratio of "poor quality" scans to "high quality" scans you come across in your research.

  - Are some artifacts more apparent in certain parts of the brain? If so, please explain.

  - Do you ever just sample some slices from the scan rather than inspecting all of them? If so, please explain how many slices you usually sample and how you choose those samples.

- Improving the process

  - Do you wish the QA process were faster to complete?

  - Do you wish the QA process were easier to complete?

  - What tools, real or imaginary, would you use to help your QA process if they were available?

Boards

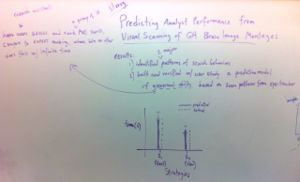

- Poster sketches and in-class critiques

  - Oct 20, 2011

  - Oct 18, 2011

  - Oct 13, 2011

- MTurk HIT Gallery

  - Can we use Turkers to evaluate image quality in MRI scans? Nov 14, 2011